Explainable, Trustworthy, and Reproducible AI

At NGI, we emphasise the importance of explainable, trustworthy, and reproducible AI in geotechnical engineering. Machine learning (ML) models must be transparent and interpretable so that engineers can trust their results and make informed decisions based on AI-driven insights.

This is particularly important in geotechnics, where safety, reliability, and regulatory compliance are essential.

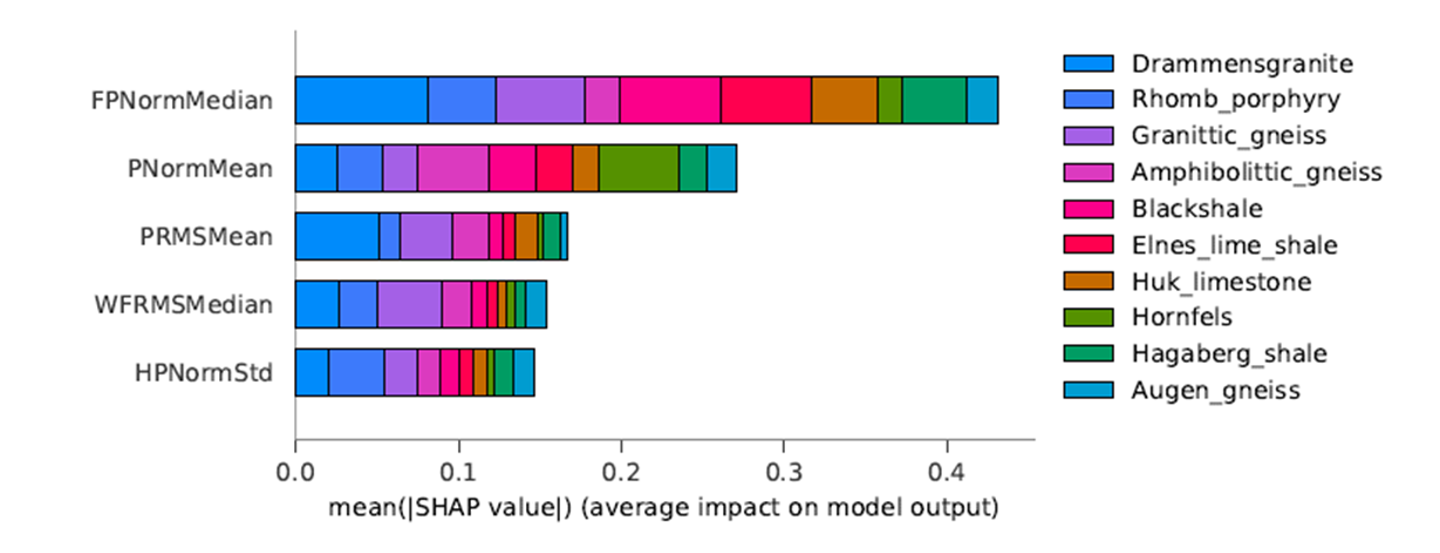

We follow best practices for model explainability, including feature importance analysis, interpretable model selection, and rigorous validation. Techniques such as SHAP (SHapley Additive exPlanations) values and partial dependence plots show how an ML model arrives at its predictions, which lets us check that the learned relationships are consistent with geotechnical knowledge and domain expertise.
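To make the two techniques concrete, the snippet below is a minimal, dependency-free sketch, not NGI's implementation. It computes exact Shapley values by enumerating every coalition of features, replacing "absent" features with a single baseline (here the feature means), which is a simplification of what the SHAP library does with a background dataset. It also computes a partial dependence curve by forcing one feature to each grid value and averaging the predictions. The model `toy_model`, its weights, the function names, and the three drilling records are all hypothetical; the feature names only borrow the drilling parameters listed in Figure 5.

```python
from itertools import combinations
from math import factorial

# Hypothetical feature order, borrowing the drilling parameters named in Figure 5.
FEATURES = ["feed_pressure", "penetration_rate", "water_flow", "hammer_pressure"]
WEIGHTS = [0.8, -1.5, 0.1, 0.5]  # hypothetical weights, not a fitted model


def toy_model(x):
    """Hypothetical linear score standing in for a trained model's output."""
    return sum(w * v for w, v in zip(WEIGHTS, x))


def exact_shapley(predict, x, baseline):
    """Exact Shapley values by enumerating every coalition of the other features.

    Features outside a coalition are replaced by their baseline value.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without_i = [x[j] if j in coalition else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]
                phi[i] += weight * (predict(with_i) - predict(without_i))
    return phi


def partial_dependence(predict, data, feature, grid):
    """Mean prediction over the data with one feature forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in data:
            modified = list(row)
            modified[feature] = value
            total += predict(modified)
        curve.append(total / len(data))
    return curve


# Three hypothetical drilling records (illustrative numbers, not measurements).
data = [
    [10.0, 2.0, 50.0, 12.0],
    [14.0, 1.2, 45.0, 15.0],
    [8.0, 3.1, 60.0, 10.0],
]
baseline = [sum(column) / len(data) for column in zip(*data)]
x = data[1]

phi = exact_shapley(toy_model, x, baseline)
for name, value in zip(FEATURES, phi):
    print(f"{name:>16}: {value:+.3f}")

# Efficiency property: contributions sum to f(x) - f(baseline).
print(round(sum(phi), 6), round(toy_model(x) - toy_model(baseline), 6))

pd_curve = partial_dependence(toy_model, data, FEATURES.index("penetration_rate"), [1.0, 2.0, 3.0])
print([round(v, 3) for v in pd_curve])
```

For a linear model like `toy_model`, each Shapley value reduces to `weight * (value - baseline)`, which makes a convenient sanity check; for non-linear models the values also distribute interaction effects between features. Exact enumeration needs 2^(n-1) coalitions per feature, so with many features, libraries such as SHAP rely on approximations or model-specific algorithms instead.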

Reproducibility is a key focus in our ML research and applications. We prioritise well-documented workflows, version control, and data management to ensure that ML experiments can be replicated and validated by other researchers and industry professionals. Our commitment to transparent and reproducible ML aligns with international best practices in AI-driven decision support.
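One common way to make an ML run repeatable is to drive all randomness from an explicit seed and to store a small manifest next to the results that records the code version, the environment, and a fingerprint of the data. The sketch below assumes the code lives in a Git repository and uses only the Python standard library; the function names (`current_git_commit`, `seeded_split`, `make_run_manifest`), the manifest fields, the configuration, and the CSV payload are all hypothetical and are not part of NGI's published workflow.

```python
import hashlib
import json
import platform
import random
import subprocess
from datetime import datetime, timezone


def current_git_commit():
    """Return the current Git commit hash, or None if Git or a repository is unavailable."""
    try:
        result = subprocess.run(
            ["git", "rev-parse", "HEAD"], capture_output=True, text=True, check=True
        )
        return result.stdout.strip()
    except (OSError, subprocess.CalledProcessError):
        return None


def seeded_split(n_rows, seed, test_fraction=0.25):
    """Shuffle row indices with a dedicated, seeded RNG so the split is repeatable."""
    rng = random.Random(seed)
    indices = list(range(n_rows))
    rng.shuffle(indices)
    n_test = int(n_rows * test_fraction)
    return indices[n_test:], indices[:n_test]


def make_run_manifest(dataset_bytes, config, seed):
    """Collect what another person would need to rerun this experiment."""
    return {
        "created_utc": datetime.now(timezone.utc).isoformat(),
        "git_commit": current_git_commit(),
        "python": platform.python_version(),
        "platform": platform.platform(),
        "seed": seed,
        "dataset_sha256": hashlib.sha256(dataset_bytes).hexdigest(),
        "config": config,
    }


# Hypothetical inputs: a tiny CSV payload and a made-up model configuration.
csv_bytes = b"feed_pressure,penetration_rate,water_flow,hammer_pressure\n10.0,2.0,50.0,12.0\n"
config = {"model": "random_forest", "n_estimators": 200, "test_fraction": 0.25}

train_idx, test_idx = seeded_split(20, seed=42, test_fraction=config["test_fraction"])
manifest = make_run_manifest(csv_bytes, config, seed=42)
print(json.dumps(manifest, indent=2))
```

Using a dedicated `random.Random(seed)` instance rather than the global generator keeps the split independent of any other code that draws random numbers. The data hash lets a reviewer confirm they are rerunning on exactly the same input file.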

NGI has developed and published a set of best practices to support trustworthy AI in geotechnics, guiding ML practitioners in structuring their workflows from data preprocessing to model validation and deployment. These best practices aim to ensure that ML applications in geotechnics are robust, interpretable, and aligned with engineering standards.

Figure 5. Shapley values illustrate the most important features for predicting rock type from drilling data. Relationships that agree with engineering intuition increase the trustworthiness of the model. The abbreviations used in the figure are FP = Feeder pressure, P = Penetration rate, WF = Water flow, and HP = Hammer pressure.